Author:

(1) David M. Markowitz, Department of Communication, Michigan State University, East Lansing, MI 48824.

Editor's note: This is the final part of a paper evaluating the effectiveness of using generative AI to simplify science communication and enhance public trust in science. You can re-read the rest of the paper via the table of links below.

Table of Links

- Abstract

- The Benefits of Simple Writing

- The Current Work

- Study 1a: Method

- Study 1a: Results

- Study 1b: Method

- Study 1b: Results

- Study 2: Method

- Study 2: Results

- General Discussion, Acknowledgements, and References

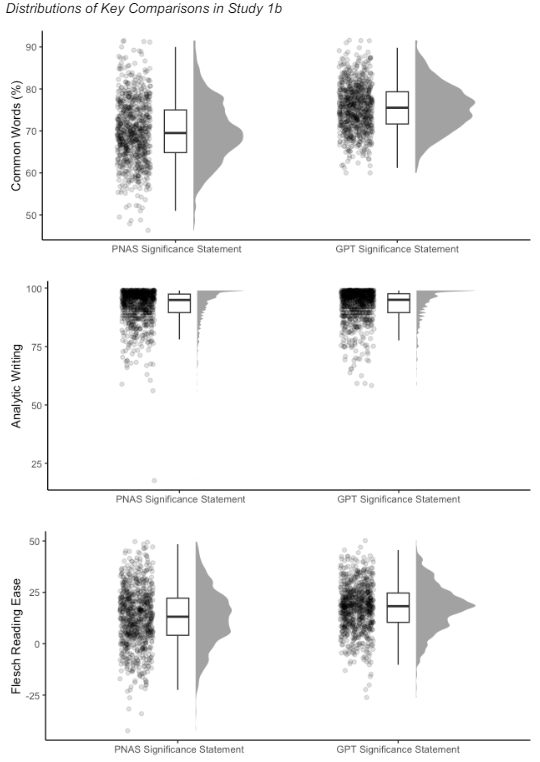

General Discussion

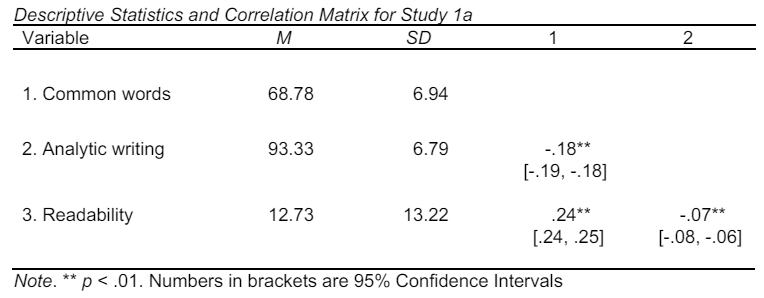

The current work explored the potential of generative AI to simplify scientific communication, enhance public trust in scientists, and increase engagement in understanding science. The evidence suggested that while lay summaries from a top general science journal, PNAS, were linguistically simpler than scientific summaries, the difference between these texts could be made larger. Generative AI made scientific texts simpler and more approachable than the human-written versions of such summaries. This finding is notable given current challenges of scientific literacy and the disconnect between scientific communities and the public: AI was better than humans at communicating like a human, or at least at carrying out the intention to write simply (42, 48). As prior work suggests, decreasing trust in scientists and scientific institutions, exacerbated by complex communication barriers, calls for inventive solutions that are scalable and relatively inexpensive. The solutions offered here, particularly generative AI, represent one potential pathway toward simpler, more approachable, and improved science communication.

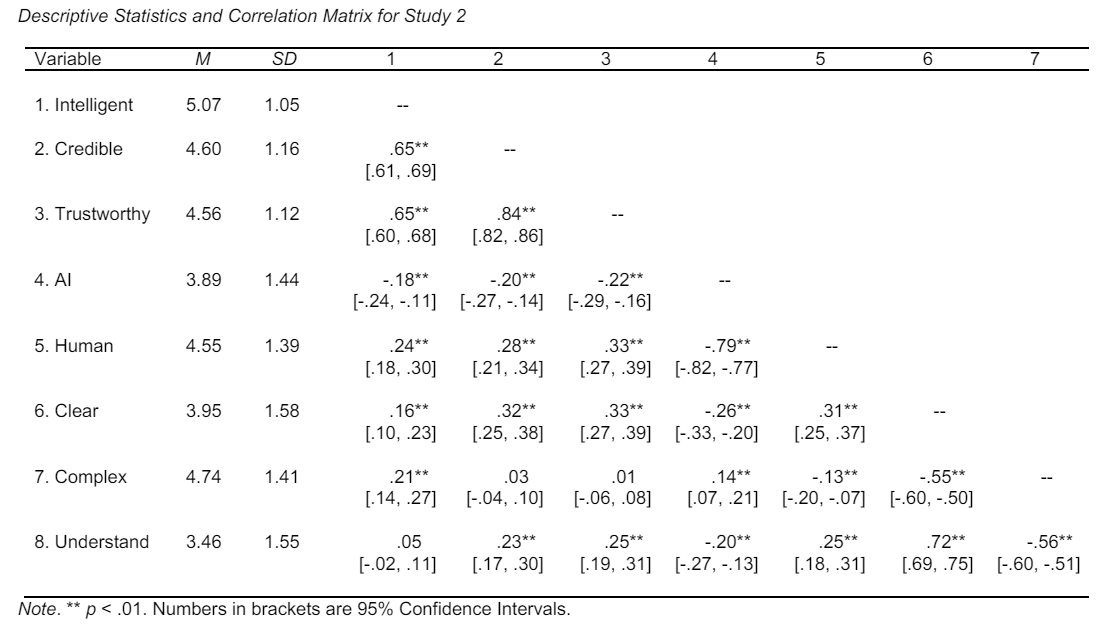

These data build on a body of existing fluency research and provide empirical support for the hypothesis that linguistic simplicity, facilitated by AI, can significantly influence public perceptions of scientists’ credibility, trustworthiness, and intelligence. Generative AI, specifically large language models like GPT-4, can produce scientific summaries that are not only simpler but also more accessible to lay audiences than those written by human experts. These results align with a broader scientific narrative (and interest) that advocates for clearer and more direct communication strategies in science dissemination (49).

The implications of this paper are twofold. First, the results suggest that leveraging AI in scientific communication can bridge scientific communities and the general public. This could be particularly beneficial at a time when science is increasingly central to everyday decision-making but is also viewed with skepticism or deemed inaccessible by non-experts. Second, the increased readability and approachability of AI-generated texts might contribute to higher engagement with scientific content, thereby cultivating a more informed public.

Despite these positive outcomes, it is important to acknowledge that the simpler-is-better hypothesis was not universally supported (18). While AI-generated summaries were rated higher in terms of credibility and trustworthiness, they were also perceived as less intelligent. This inconsistency underscores the complex interplay between content simplicity and perceived expertise, suggesting that while simpler language can enhance understanding and trust, it might simultaneously reduce perceived intelligence. In science, people may be perceived as smart but untrustworthy and not credible, which suggests that a one-size-fits-all model of the relationship between complexity and person-perceptions may be inaccurate.

Future research should examine these dynamics further, potentially exploring how different domains of science (e.g., communicating about health, communicating about climate) might uniquely benefit from AI-mediated communication (50). Studies could also investigate the long-term impact of AI-mediated communication strategies on public engagement with science and scientists. Finally, all studies in this paper used texts from only one journal, so future work should test texts from other journals as well. The choice of PNAS was purposeful, however: as a general science journal that publishes high-impact research, it helped to ensure that fluency effects were investigated across core domains of scientific inquiry.

Acknowledgements

The author thanks [Redacted] for their thoughts and comments on an earlier draft of this manuscript.

References

1. M. C. Silverstein, et al., Operating on anxiety: Negative affect toward breast cancer and choosing contralateral prophylactic mastectomy. Med Decis Making 43, 152–163 (2023).

2. C. J. Kirchhoff, M. C. Lemos, S. Dessai, Actionable knowledge for environmental decision making: Broadening the usability of climate science. Annual Review of Environment and Resources 38, 393–414 (2013).

3. D. von Winterfeldt, Bridging the gap between science and decision making. Proceedings of the National Academy of Sciences 110, 14055–14061 (2013).

4. R. C. Laugksch, Scientific literacy: A conceptual overview. Science Education 84, 71–94 (2000).

5. Y. Algan, D. Cohen, E. Davoine, M. Foucault, S. Stantcheva, Trust in scientists in times of pandemic: Panel evidence from 12 countries. Proceedings of the National Academy of Sciences 118, e2108576118 (2021).

6. B. Kennedy, A. Tyson, Americans’ trust in scientists, positive views of science continue to decline. Pew Research Center Science & Society (2023). Available at: https://www.pewresearch.org/science/2023/11/14/americans-trust-in-scientists-positive-views-of-science-continue-to-decline/ [Accessed 25 February 2024].

7. A. Lupia, et al., Trends in US public confidence in science and opportunities for progress. Proceedings of the National Academy of Sciences 121, e2319488121 (2024).

8. S. Vazire, Quality uncertainty erodes trust in science. Collabra: Psychology 3, 1 (2017).

9. H. Song, D. M. Markowitz, S. H. Taylor, Trusting on the shoulders of open giants? Open science increases trust in science for the public and academics. Journal of Communication 72, 497–510 (2022).

10. T. Rosman, M. Bosnjak, H. Silber, J. Koßmann, T. Heycke, Open science and public trust in science: Results from two studies. Public Underst Sci 31, 1046–1062 (2022).

11. J. Beard, M. Wang, Rebuilding public trust in science. Boston University (2023). Available at: https://www.bu.edu/articles/2023/rebuilding-public-trust-in-science/ [Accessed 18 April 2024].

12. S. Martinez-Conde, S. L. Macknik, Finding the plot in science storytelling in hopes of enhancing science communication. Proceedings of the National Academy of Sciences 114, 8127–8129 (2017).

13. A. L. Alter, D. M. Oppenheimer, Uniting the tribes of fluency to form a metacognitive nation. Personality and Social Psychology Review 13, 219–235 (2009).

14. D. M. Oppenheimer, The secret life of fluency. Trends in Cognitive Sciences 12, 237–241 (2008).

15. D. M. Oppenheimer, Consequences of erudite vernacular utilized irrespective of necessity: problems with using long words needlessly. Applied Cognitive Psychology 20, 139–156 (2006).

16. N. Schwarz, “Feelings-as-information theory” in Handbook of Theories of Social Psychology: Volume 1, P. A. M. Van Lange, A. W. Kruglanski, E. T. Higgins, Eds. (SAGE Publications Inc., 2012), pp. 289–308.

17. N. Schwarz, “Metacognition” in APA Handbook of Personality and Social Psychology, Volume 1: Attitudes and Social Cognition, APA handbooks in psychology, M. Mikulincer, P. R. Shaver, E. Borgida, J. A. Bargh, Eds. (American Psychological Association, 2015), pp. 203–229.

18. D. M. Markowitz, H. C. Shulman, The predictive utility of word familiarity for online engagements and funding. Proceedings of the National Academy of Sciences 118, e2026045118 (2021).

19. H. C. Shulman, M. D. Sweitzer, Advancing framing theory: Designing an equivalency frame to improve political information processing. Human Communication Research 44, 155–175 (2018).

20. M. D. Sweitzer, H. C. Shulman, The effects of metacognition in survey research: Experimental, cross-sectional, and content-analytic evidence. Public Opinion Quarterly 82, 745–768 (2018).

21. T. Rogers, J. Lasky-Fink, Writing for busy readers (Dutton, 2023).

22. H. Song, N. Schwarz, If it’s hard to read, it’s hard to do: Processing fluency affects effort prediction and motivation. Psychol Sci 19, 986–988 (2008).

23. D. M. Markowitz, Instrumental goal activation increases online petition support across languages. Journal of Personality and Social Psychology 124, 1133–1145 (2023).

24. C. K. Chung, J. W. Pennebaker, “The psychological functions of function words” in Social Communication, K. Fiedler, Ed. (Psychology Press, 2007), pp. 343–359.

25. J. W. Pennebaker, The secret life of pronouns: What our words say about us (Bloomsbury Press, 2011).

26. J. W. Pennebaker, C. K. Chung, J. Frazee, G. M. Lavergne, D. I. Beaver, When small words foretell academic success: The case of college admissions essays. PLOS ONE 9, e115844 (2014).

27. S. Seraj, K. G. Blackburn, J. W. Pennebaker, Language left behind on social media exposes the emotional and cognitive costs of a romantic breakup. Proceedings of the National Academy of Sciences 118, e2017154118 (2021).

28. D. M. Markowitz, Psychological trauma and emotional upheaval as revealed in academic writing: The case of COVID-19. Cognition and Emotion 36, 9–22 (2022).

29. R. Flesch, A new readability yardstick. Journal of Applied Psychology 32, 221–233 (1948).

30. J. Stricker, A. Chasiotis, M. Kerwer, A. Günther, Scientific abstracts and plain language summaries in psychology: A comparison based on readability indices. PLOS ONE 15, e0231160 (2020).

31. I. M. Verma, PNAS Plus: Refining a successful experiment. Proceedings of the National Academy of Sciences 109, 13469 (2012).

32. J. W. Pennebaker, R. L. Boyd, R. J. Booth, A. Ashokkumar, M. E. Francis, Linguistic inquiry and word count: LIWC-22. (2022). Deposited 2022.

33. D. M. Markowitz, J. T. Hancock, Linguistic obfuscation in fraudulent science. Journal of Language and Social Psychology 35, 435–445 (2016).

34. D. M. Markowitz, M. Kouchaki, J. T. Hancock, F. Gino, The deception spiral: Corporate obfuscation leads to perceptions of immorality and cheating behavior. Journal of Language and Social Psychology 40, 277–296 (2021).

35. D. M. Markowitz, What words are worth: National Science Foundation grant abstracts indicate award funding. Journal of Language and Social Psychology 38, 264–282 (2019).

36. Y. R. Tausczik, J. W. Pennebaker, The psychological meaning of words: LIWC and computerized text analysis methods. Journal of Language and Social Psychology 29, 24–54 (2010).

37. R. L. Boyd, A. Ashokkumar, S. Seraj, J. W. Pennebaker, “The development and psychometric properties of LIWC-22” (University of Texas at Austin, 2022).

38. M. E. Ireland, J. W. Pennebaker, Language style matching in writing: Synchrony in essays, correspondence, and poetry. Journal of Personality and Social Psychology 99, 549–571 (2010).

39. K. N. Jordan, J. W. Pennebaker, C. Ehrig, The 2016 U.S. presidential candidates and how people tweeted about them. SAGE Open 8, 215824401879121 (2018).

40. K. N. Jordan, J. Sterling, J. W. Pennebaker, R. L. Boyd, Examining long-term trends in politics and culture through language of political leaders and cultural institutions. Proceedings of the National Academy of Sciences 116, 3476–3481 (2019).

41. K. Benoit, et al., quanteda.textstats: Textual statistics for the quantitative analysis of textual data. (2021). Deposited 24 November 2021.

42. D. M. Markowitz, J. T. Hancock, J. N. Bailenson, Linguistic markers of inherently false AI communication and intentionally false human communication: Evidence from hotel reviews. Journal of Language and Social Psychology 43, 63–82 (2024).

43. C. K. Chung, J. W. Pennebaker, Revealing dimensions of thinking in open-ended self descriptions: An automated meaning extraction method for natural language. Journal of Research in Personality 42, 96–132 (2008).

44. D. M. Markowitz, The meaning extraction method: An approach to evaluate content patterns from large-scale language data. Frontiers in Communication 6, 13 (2021).

45. D. Bates, M. Mächler, B. M. Bolker, S. C. Walker, Fitting linear mixed-effects models using lme4. Journal of Statistical Software 67 (2015).

46. A. Kuznetsova, P. B. Brockhoff, R. H. B. Christensen, S. P. Jensen, lmerTest: Tests in linear mixed effects models. (2020). Deposited 23 October 2020.

47. K. Bartoń, MuMIn. (2020). Deposited 2020.

48. M. Jakesch, J. T. Hancock, M. Naaman, Human heuristics for AI-generated language are flawed. Proceedings of the National Academy of Sciences 120, e2208839120 (2023).

49. An act to enhance citizen access to Government information and services by establishing that Government documents issued to the public must be written clearly, and for other purposes. (2010).

50. J. T. Hancock, M. Naaman, K. Levy, AI-Mediated Communication: Definition, research agenda, and ethical considerations. Journal of Computer-Mediated Communication 25, 89–100 (2020).

This paper is available on arXiv under a CC BY 4.0 DEED license.