Future of AD Security: Addressing Limitations and Ethical Concerns in Typographic Attack Research

1 Oct 2025

This article summarizes a comprehensive framework for typographic attacks, demonstrating their effectiveness and transferability against Vision-LLMs such as LLaVA.

Empirical Study: Evaluating Typographic Attack Effectiveness Against Vision-LLMs in AD Systems

1 Oct 2025

This article presents an empirical study on the effectiveness and transferability of typographic attacks against major Vision-LLMs using AD-specific datasets.

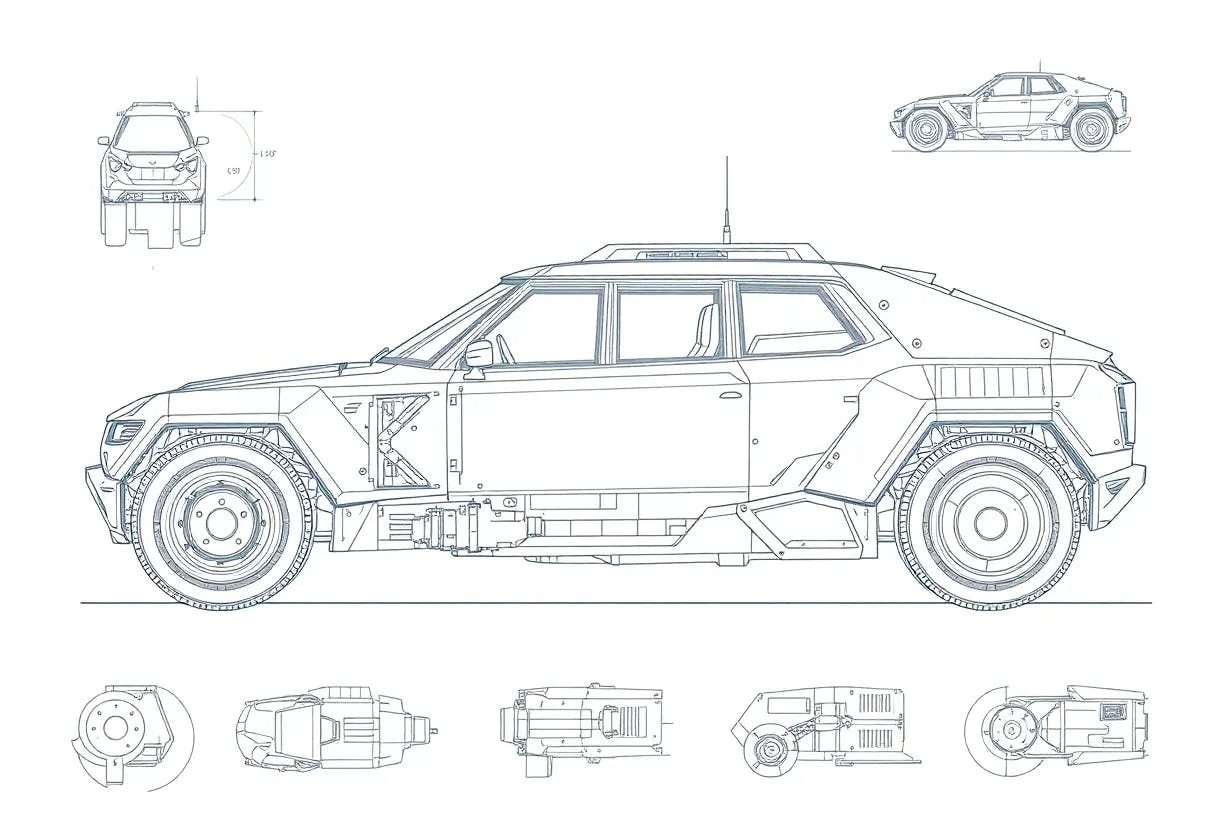

Foreground vs. Background: Analyzing Typographic Attack Placement in Autonomous Driving Systems

1 Oct 2025

This article explores the physical realization of typographic attacks, categorizing their deployment into background and foreground elements.

Exploiting Vision-LLM Vulnerability: Enhancing Typographic Attacks with Instructional Directives

30 Sept 2025

This article proposes a linguistic augmentation scheme for typographic attacks using explicit instructional directives.

Methodology for Adversarial Attack Generation: Using Directives to Mislead Vision-LLMs

30 Sept 2025

This article details the multi-step typographic attack pipeline, including Attack Auto-Generation and Attack Augmentation.

The Dual-Edged Sword of Vision-LLMs in AD: Reasoning Capabilities vs. Attack Vulnerabilities

30 Sept 2025

This article analyzes the critical safety trade-off of integrating Vision-LLMs into autonomous driving (AD) systems.

Autoregressive Vision-LLMs: A Simplified Mathematical Formulation

30 Sept 2025

This article explains the role of logits and the softmax function in converting the model's output vector into a probability distribution over the next token.
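As a rough illustration of the step summarized above, the sketch below turns a logit vector z into next-token probabilities with softmax, p_i = exp(z_i) / sum_j exp(z_j), and then picks a token greedily. It is a minimal sketch under stated assumptions: the four-word vocabulary, the logit values, and the helper names `softmax` and `next_token` are hypothetical, and greedy decoding is only one of several possible sampling strategies.

```python
import math

def softmax(logits):
    """Convert a vector of logits into a probability distribution.

    Subtracting the maximum logit first keeps exp() from overflowing
    and does not change the resulting probabilities.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(logits, vocab):
    """Greedy decoding (an assumption here): return the most probable token and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return vocab[best], probs[best]

# Hypothetical vocabulary and logits, for illustration only.
vocab = ["stop", "go", "yield", "turn"]
logits = [2.0, 1.0, 0.1, -1.0]
token, p = next_token(logits, vocab)
print(token, round(p, 3))
```

Because softmax is monotonic, the token with the largest logit always receives the largest probability; the softmax step matters when probabilities, not just the argmax, are needed (for example, for sampling).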

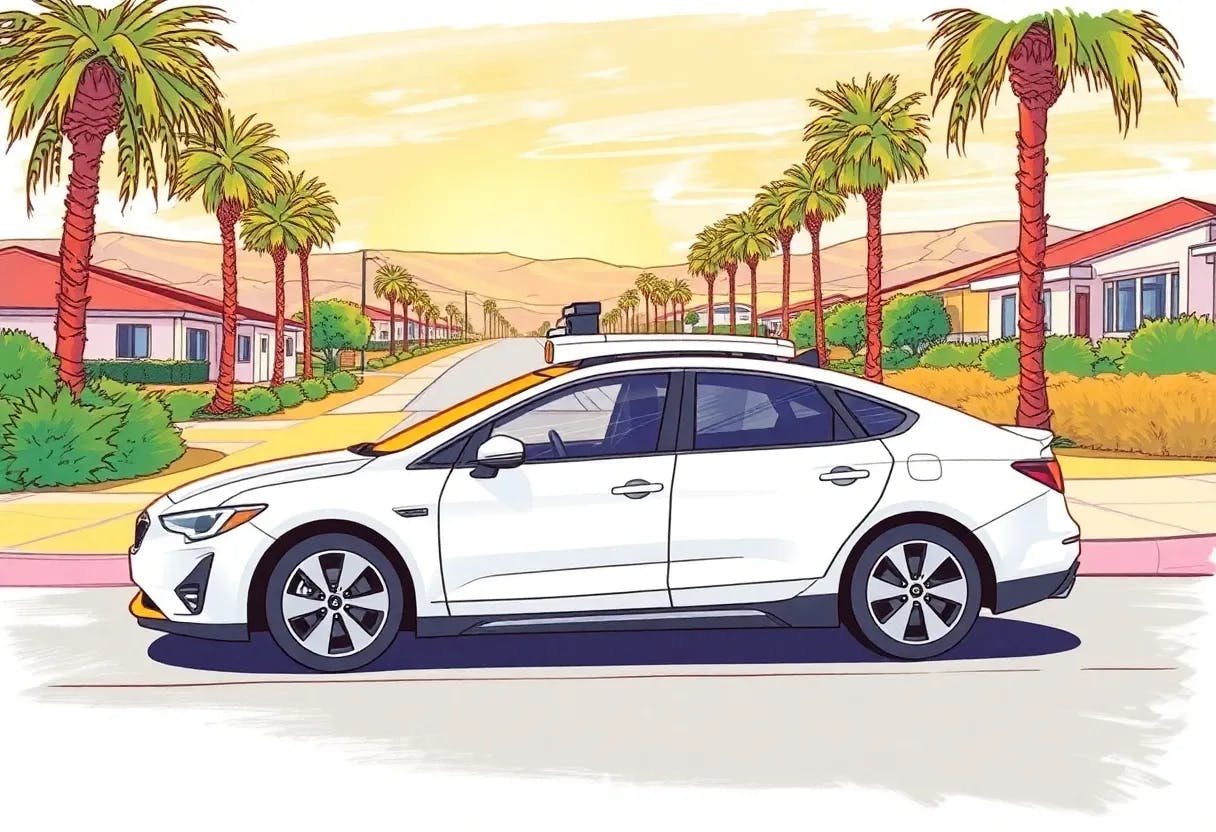

The Vulnerability of Autonomous Driving to Typographic Attacks: Transferability and Realizability

30 Sept 2025

This article reviews and compares two major types of adversarial attacks against neural networks: gradient-based methods (like PGD) and typographic attacks.

The Integration of Vision-LLMs into AD Systems: Capabilities and Challenges

27 Sept 2025

This article reviews the development and application of Vision Large Language Models (Vision-LLMs), focusing on their integration into autonomous driving systems.